About Me

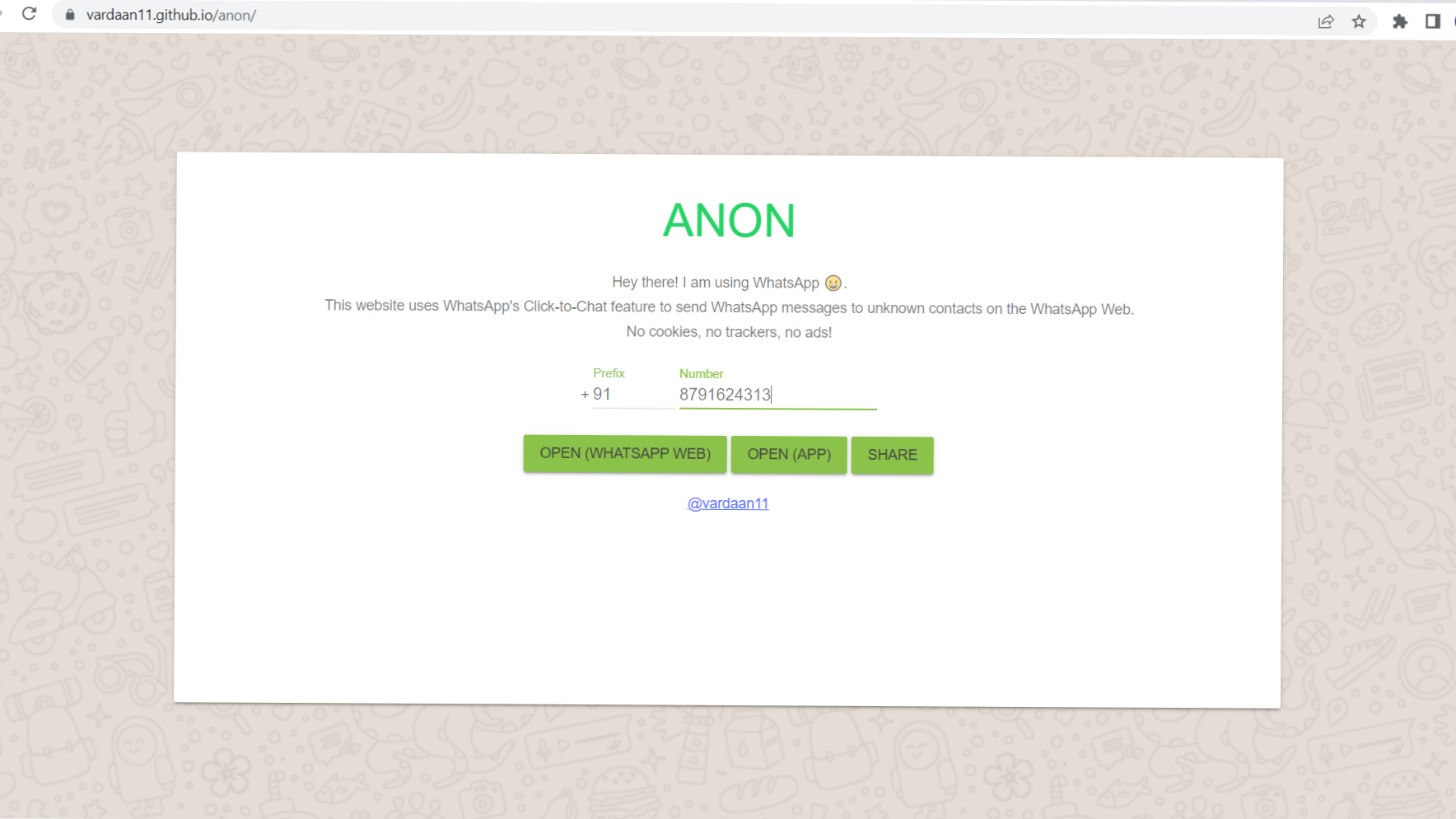

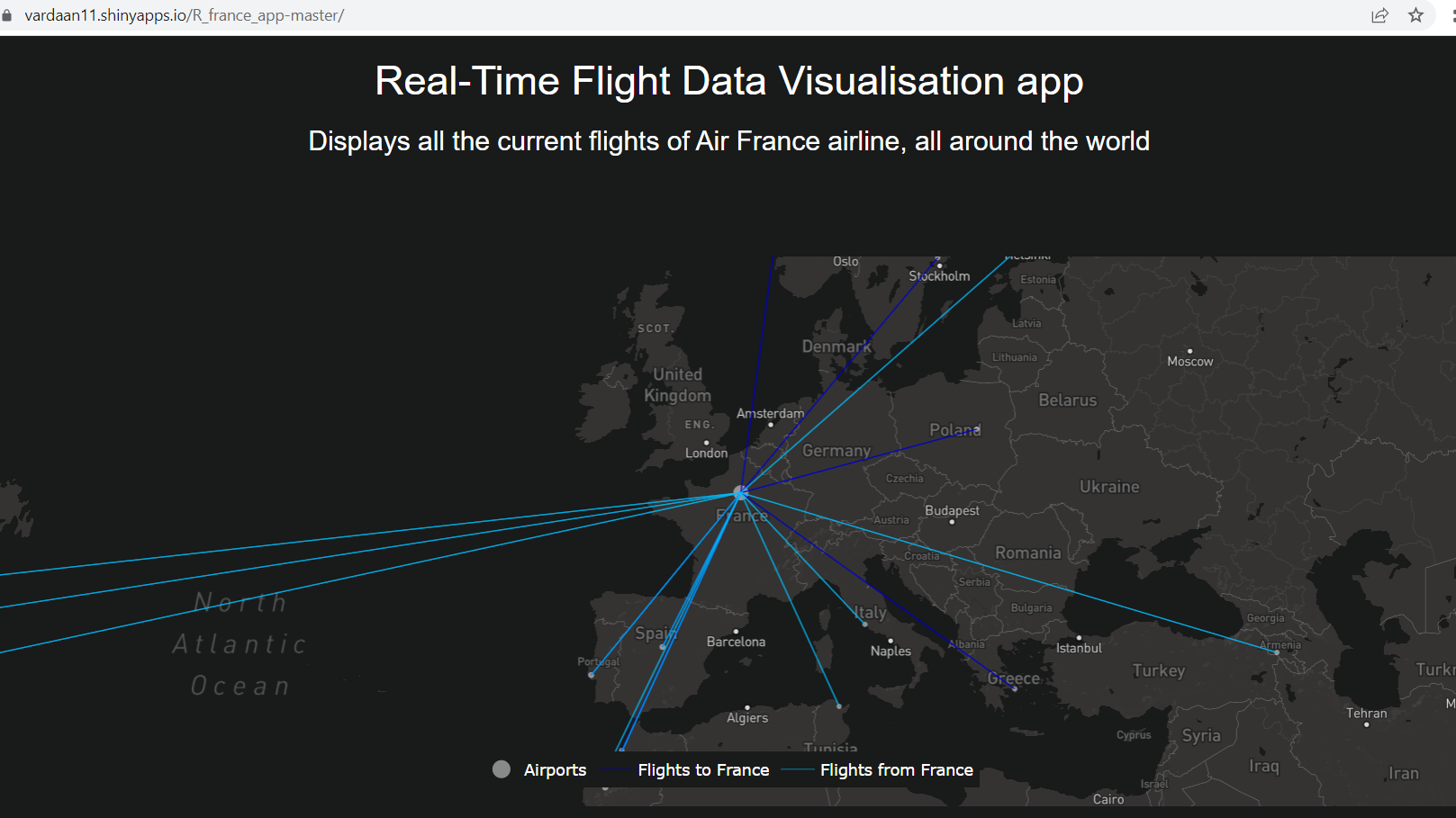

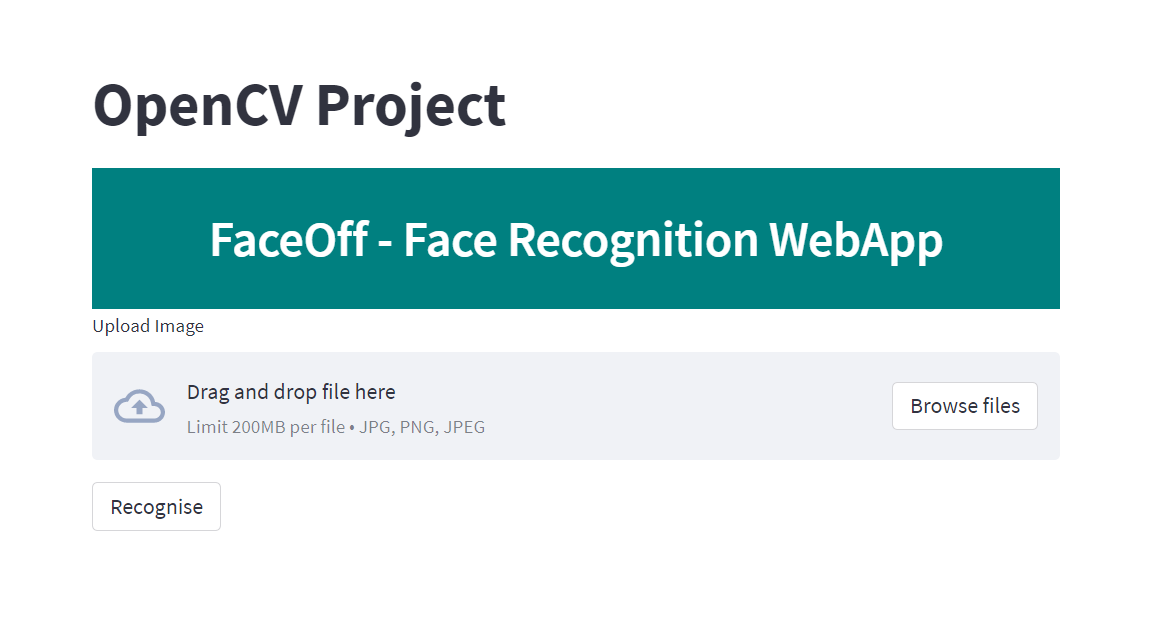

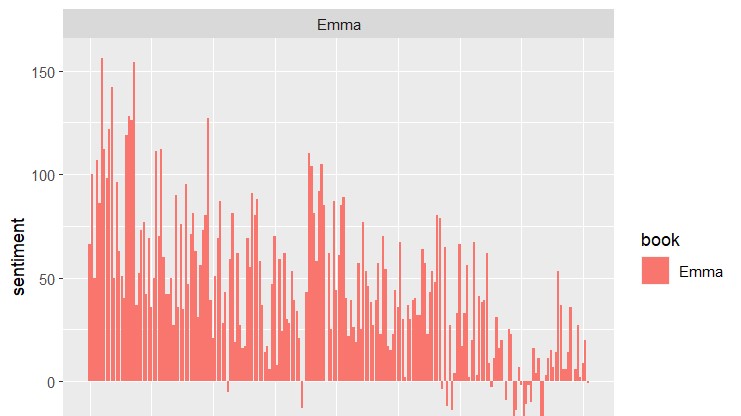

My name is Vardaan. I am a final-year Computer Science Engineering student at VIT Bhopal University. I chose this field out of curiosity about computers and how they work. During my time at the university, I have had the privilege of studying and exploring fields such as Computer Vision, Data Analytics, and Web Development.

- Name: Vardaan Vishnu

- Age: 21

- Occupation: Upcoming intern @ PwC India

I have been part of various team projects focused on solving real-world problems. Outside academics, I was a core member of Meraki, my university's fine arts club, where I organised various cultural events. I was also a tech columnist for The Frontier Vedette. In my free time, I love watching movies and reading books.